From July 2025 to June 2026, the CSU will pay $15 million to make ChatGPT available for all students. But students, staff, and faculty are raising questions about the CSU’s decision to embrace artificial intelligence while it also threatens layoffs and belt-tightening. Many also worry that ChatGPT harms critical thinking skills, causes environmental harm, violates users’ privacy, reinforces racial and gender biases, and exploits workers in the Global South.

What is behind the CSU’s reasoning for this partnership? And should CSU students use ChatGPT now that it is available as a free resource?

In an LAist interview, CSU chief information officer Ed Clark said the CSU was motivated to make AI tools like ChatGPT more inclusive and accessible for students. As a university system investing in AI on an unprecedented scale, the CSU hopes to provide AI tools at a lower cost per user than similar university partnerships with AI companies.

So it goes: AI is here whether we like it or not. But at least the CSU wants to ensure students have access to it, right?

Wrong. The CSU has entered into a Faustian bargain that goes against its most basic values.

For starters, generative AI steals our information. According to Fereniki Panagopoulou’s article “Privacy in the Age of Artificial Intelligence,” AI requires “the collection and processing of large data sets.” The more AI is used, the more it knows about us and our private data, and there is a significant lack of regulation around what it can learn from us. Moreover, when AI bots are informed by data on the internet in which personal biases and discrimination are present, they perpetuate what they learn. This increases misinformation and mistreatment of marginalized groups. Certain algorithms, such as those behind AI facial recognition or hiring systems, also risk bias against minority communities.

In her recent book, “Empire of AI: Dreams and Nightmares in Sam Altman’s OpenAI,” longtime tech reporter Karen Hao dispels the myths that surround ChatGPT and exposes the more sinister reality behind artificial intelligence boosterism. Her reporting uncovered how OpenAI relies on contracted labor from so-called “data annotation firms” in the Global South. In an interview with Democracy Now, Hao describes how content moderators are left “very deeply psychologically traumatized” and paid “a few bucks an hour, if at all” for the work they do. AI chatbots like ChatGPT rely on this labor, and companies like OpenAI are complicit in the senseless exploitation of workers in the Global South.

Yet, OpenAI’s involvement in labor exploitation is not the only labor issue we should be concerned about. Public investment in artificial intelligence comes at a time when Americans are working more and making less than ever. According to the Economic Policy Institute, worker productivity has grown 2.7 times as much as pay since 1979.

This raises the question: Is AI really going to make our workplaces better? The future looks grim. Recent books like “Cyberboss: The Rise of Algorithmic Management and the New Struggle for Control at Work” and “Inside the Invisible Cage: How Algorithms Control Workers” have revealed mounting evidence that billionaires and large corporations are investing in AI with the intent of developing tools to further exploit workers and make our labor more precarious. So, while job loss created by AI is a real concern, we should be equally concerned about AI controlling workers in a future stripped of basic labor protections.

Which brings us to OpenAI CEO Sam Altman – the man behind Trump. (Like, literally behind Donald Trump at the presidential inauguration.) Altman called Trump “a breath of fresh air for tech” and donated $1 million to his inauguration fund. And so far, so good, since Trump’s “AI Action Plan” is poised to deregulate the tech industry for the benefit of large corporations’ relentless pursuit of profit. Just last Thursday, many tech CEOs met with the President at the White House, where Altman thanked Trump “for being such a pro-business, pro-innovation president.”

Additionally, investment in artificial intelligence does not uplift communities and create skilled job opportunities the same way public infrastructure projects might. Rather than rave about how AI is going to “create new jobs,” we should ask ourselves if that money could instead be used to pay workers better and build more houses, hospitals, schools, and libraries. The harm also extends abroad: Microsoft, which has a 49% stake in OpenAI, has been deeply complicit in Israel’s ongoing genocide in the Gaza Strip. According to an investigation by the Associated Press, “[the Israeli military’s] use of Microsoft and OpenAI technology skyrocketed” after Oct. 7, 2023. More recently, Francesca Albanese, the United Nations special rapporteur on the situation of human rights in the occupied Palestinian territory, named Microsoft as one of 48 corporate actors enabling genocide and human rights violations against Palestinians. Not only do we harm ourselves by investing in OpenAI, but we also do real harm to others.

Unlike your typical search engine, AI chatbots use an enormous amount of data and energy. Many environmental justice activists have fought to protect their land, water, and resources from exploitation and pollution caused by large AI data centers. For example, the NAACP launched a lawsuit against Elon Musk’s xAI over its turbines in South Memphis, Tennessee, which were polluting Black and minority communities. Data centers also require vast amounts of fresh water for cooling, which can threaten water-stressed communities.

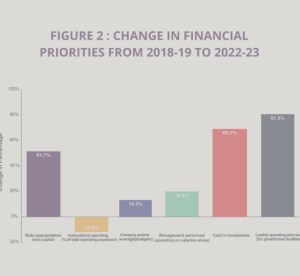

And if all of the above were not bad enough, the CSU’s investment of nearly $17 million in AI demonstrates a worsening pattern of behavior by CSU leaders. A recent report by the California Faculty Association titled, “Shortchanging Students: How the CSU Is Failing Our Future,” demonstrates how AI investment could come at the expense of faculty working conditions and student learning conditions. A telling figure shows how the CSU has changed its financial priorities from 2018-19 to 2022-23. While the CSU receives more appropriations from the state and builds new facilities – a good thing – it also hoards cash in investments, raises administrative salaries, and increases campus police budgets. Even worse: the CSU has cut instructional spending by almost 12%. So, while the CSU may claim it invests in AI for its students’ benefit, its budget shows worsening austerity that harms students’ quality of education.

AI poses a lot of ethical, economic, and environmental challenges, but what about psychological ones?

Multiple studies have come out in the last few months that reveal AI’s effects on the brain and its learning ability. One MIT Media Lab study published in June tasked 54 participants with writing essays while their brain activity was monitored with EEG, or electroencephalography. Participants were divided into three groups: one used large language models (LLMs), another used online search engines, and the last had no online tools. In these sessions, those who used LLMs to write their essays showed significantly less cognitive connectivity, and over the long term of using AI, their abilities to learn and retain information fell below those of the groups who didn’t use LLMs.

In addition, more ChatGPT users are employing the bot as a therapist. One victim of this was 16-year-old Adam Raine, who spoke to ChatGPT often. In their lawsuit against OpenAI, his family claims that ChatGPT was well aware of Adam’s suicidal ideation and even provided advice for his attempts. They also share chilling messages between Adam and the bot. His family believes he would still be alive if it weren’t for ChatGPT. This tragic situation is an eye-opening example of AI’s inability to spot mental health crises and respond accordingly. Despite Sam Altman claiming that the newest ChatGPT version was equivalent to a “PhD-level expert in any topic,” AI is not a doctor. We cannot put our trust in a chatbot without medical training or human ethics.

According to CSUSM, our university’s vision includes “exemplary academic programs [that] will respond to societal needs and prepare students to be tomorrow’s socially just leaders and change makers.” As a public university system serving a diverse and overwhelmingly working-class student population, the CSU should not embrace technology that does immense harm to the communities it wants to empower. Not all innovation is good, and it is up to us to decide if the technology we invest in may do us great harm. Students, staff, and faculty should be skeptical about the CSU’s reasoning for supporting AI in public higher education.

Ultimately, any calls for the CSU to reconsider this deal are inseparable from the voices of students and workers demanding more control over the CSU. Serious efforts to challenge AI in the CSU are going to have to come through grassroots organizing and collective action, not individual pleas to campus administrators with salaries upwards of $500,000. Stand in solidarity with students and workers demanding a better future for the CSU and reject the naive support for AI that does nothing to address our concerns.